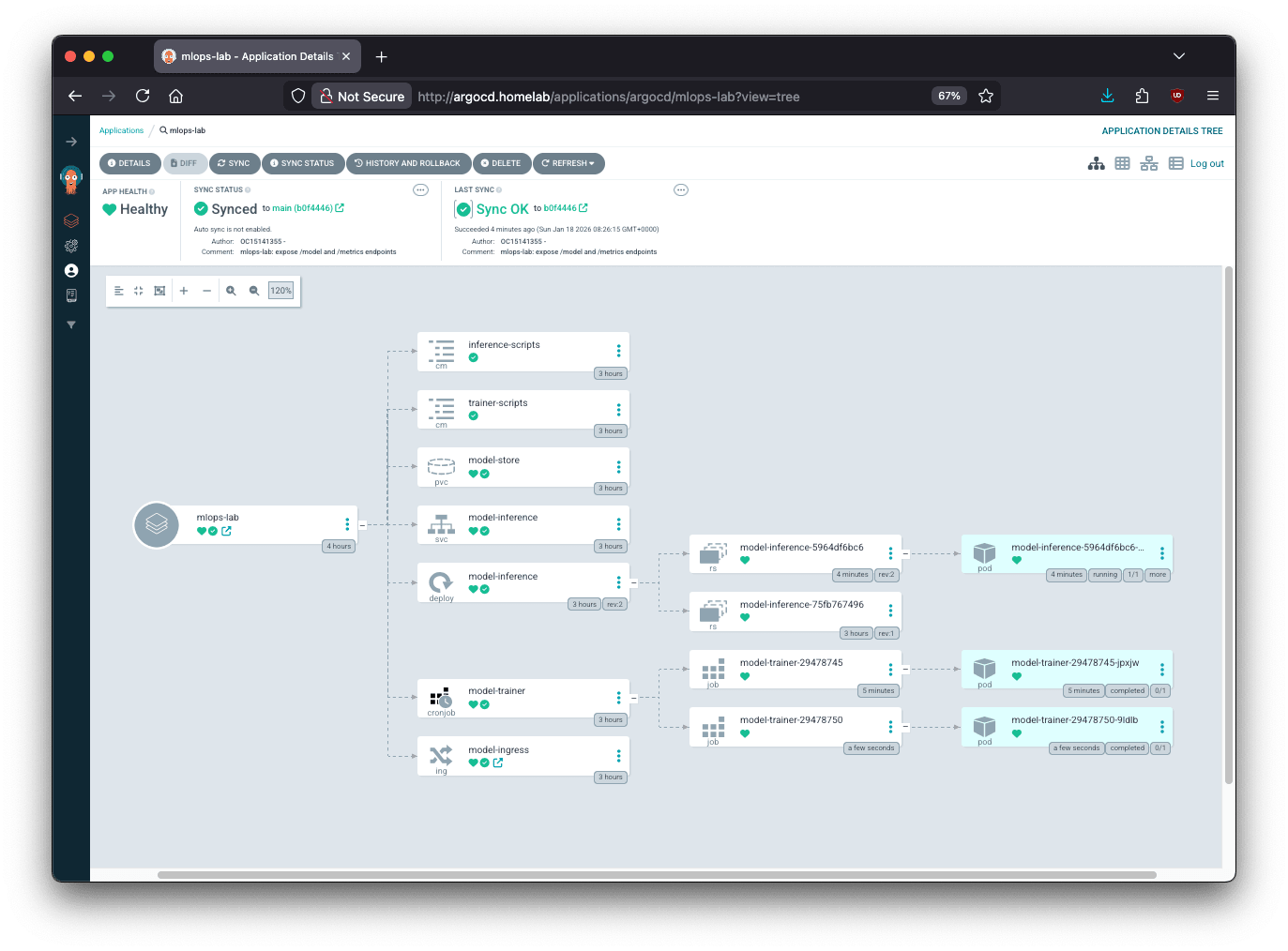

MLOps Lab: Argo CD

A minimal MLOps-style pipeline deployed via Argo CD: a trainer CronJob writes model artifacts to a local-path PVC and an inference Deployment serves predictions over HTTP through ingress-nginx + Pi-hole DNS. Built to learn scheduling/storage topology, labels/selectors, and safe GitOps workflows.

Overview

This project is a deliberately small, end-to-end Train → Store → Serve pipeline designed to teach the operational foundations of MLOps on Kubernetes.

A scheduled trainer (CronJob) writes a model artifact to persistent storage (a local-path PVC), and an inference service (Deployment) mounts the same PVC and serves HTTP predictions. The workload is exposed through ingress-nginx and reachable through DNS.

Crucially, the cluster uses node-local persistence, so the pipeline was built to respect storage topology (PVC binding + node pinning), reflecting real-world scheduling constraints rather than “happy path” demos.

What it does

Train

- A `CronJob` runs on a schedule and executes a tiny trainer script.
- The trainer writes:
  - `/models/model.json`
  - `/models/version.txt`
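
The trainer step could look roughly like the sketch below. This is not the repository's actual script: the linear model, the `weight`/`bias` keys in `model.json`, the timestamp format in `version.txt`, and the `MODEL_DIR` environment variable are all assumptions made for illustration. Only the two output paths come from the description above.

```python
import json
import os
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def fit_line(xs, ys):
    """Ordinary least squares for y = weight * x + bias (assumed model form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    weight = cov_xy / var_x
    return weight, mean_y - weight * mean_x


def write_atomic(path, text):
    """Write to a temp file in the same directory, then rename it over the
    target, so a concurrent reader never sees a half-written file."""
    fd, tmp = tempfile.mkstemp(dir=str(path.parent))
    with os.fdopen(fd, "w") as f:
        f.write(text)
    os.chmod(tmp, 0o644)  # mkstemp creates 0600; let other users read it
    os.replace(tmp, path)


def train(model_dir):
    """Fit the toy model and write model.json and version.txt into model_dir."""
    model_dir = Path(model_dir)
    model_dir.mkdir(parents=True, exist_ok=True)
    # Toy data on the line y = 2x + 1; a real trainer would load a dataset.
    xs, ys = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]
    weight, bias = fit_line(xs, ys)
    version = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    write_atomic(model_dir / "model.json",
                 json.dumps({"weight": weight, "bias": bias}))
    write_atomic(model_dir / "version.txt", version + "\n")
    return version


if __name__ == "__main__":
    # MODEL_DIR is a hypothetical override; /models matches the paths above.
    print("wrote model version", train(os.environ.get("MODEL_DIR", "/models")))
```

Writing through a temporary file and renaming is one way to keep the inference pod from reading a partially written artifact while a training run is in progress.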

Store

- Artifacts are stored in a `PersistentVolumeClaim` using `local-path`.
- Because the PV is node-local, both trainer and inference are pinned to the same node via `nodeSelector`.
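
To make the pinning concrete, the sketch below mirrors the relevant manifest fields as Python dicts and prints them as JSON, which `kubectl apply -f` also accepts. The real manifests are presumably YAML in Git. The claim name `model-store`, the node name `worker-1`, the image names, and the `1Gi` size are hypothetical placeholders; the `mlops-lab` namespace, the `local-path` storage class, and the `/models` mount path come from this README.

```python
import json

NAMESPACE = "mlops-lab"      # from the README
CLAIM_NAME = "model-store"   # hypothetical PVC name
NODE_NAME = "worker-1"       # hypothetical node hostname

pvc = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": CLAIM_NAME, "namespace": NAMESPACE},
    "spec": {
        "storageClassName": "local-path",
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "1Gi"}},  # placeholder size
    },
}


def pod_spec_fragment(container_name, image, read_only):
    """Pod spec pieces shared by the trainer and inference pod templates."""
    return {
        # Same node for both workloads, because the volume lives on one node.
        "nodeSelector": {"kubernetes.io/hostname": NODE_NAME},
        "volumes": [{
            "name": "models",
            "persistentVolumeClaim": {"claimName": CLAIM_NAME},
        }],
        "containers": [{
            "name": container_name,
            "image": image,
            "volumeMounts": [{
                "name": "models",
                "mountPath": "/models",
                "readOnly": read_only,
            }],
        }],
    }


trainer = pod_spec_fragment("trainer", "example/trainer:dev", read_only=False)
inference = pod_spec_fragment("inference", "example/inference:dev", read_only=True)

if __name__ == "__main__":
    print(json.dumps(pvc, indent=2))
    print(json.dumps({"trainer": trainer, "inference": inference}, indent=2))
```

Generating both fragments from one function keeps the `nodeSelector` and claim name identical across the two workloads, which is the property the pinning depends on.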

Serve

- An inference `Deployment` mounts the PVC and reads `model.json`.
- It exposes:
  - `GET /healthz`
  - `GET /predict?x=<value>`
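
A minimal sketch of such a service, using only the Python standard library, is shown below. It is an illustration rather than the project's actual server: the `weight`/`bias` layout of `model.json`, the JSON response shapes, port `8080`, and the `MODEL_DIR` variable are assumptions. The two routes are the ones listed above.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer
from pathlib import Path
from urllib.parse import parse_qs, urlparse

# MODEL_DIR is a hypothetical override; /models is the mount path in the README.
MODEL_DIR = Path(os.environ.get("MODEL_DIR", "/models"))


def load_model(model_dir):
    return json.loads((Path(model_dir) / "model.json").read_text())


def predict(model, x):
    # Assumed model form: y = weight * x + bias.
    return model["weight"] * x + model["bias"]


def handle(path, model_dir=MODEL_DIR):
    """Return (status, body_dict) for a request path. Kept apart from the
    HTTP plumbing so it can be exercised without opening a socket."""
    url = urlparse(path)
    if url.path == "/healthz":
        return 200, {"status": "ok"}
    if url.path == "/predict":
        values = parse_qs(url.query).get("x")
        if not values:
            return 400, {"error": "missing query parameter x"}
        try:
            x = float(values[0])
        except ValueError:
            return 400, {"error": "x must be a number"}
        try:
            # Re-read on every request so a retrained model is picked up.
            model = load_model(model_dir)
        except (OSError, ValueError):
            return 503, {"error": "model not available yet"}
        return 200, {"x": x, "y": predict(model, x)}
    return 404, {"error": "not found"}


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, body = handle(self.path)
        payload = json.dumps(body).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


if __name__ == "__main__":
    HTTPServer(("0.0.0.0", 8080), Handler).serve_forever()
```

Returning `503` when `model.json` is missing lets the pod start before the first training run completes, while `/healthz` still reports that the process itself is alive.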

Expose

- `Ingress` routes `Host: model.homelab` → `Service` → inference pod.
- Pi-hole provides stable internal DNS, so services are reachable by name rather than NodePorts.
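
Host-based routing can be checked from a client with a small smoke test like the sketch below. It builds a request either against the DNS name (the Pi-hole path) or against an ingress address with an explicit `Host` header, which is useful when DNS is not yet configured. The ingress address `192.0.2.10` is a documentation placeholder, and the assumption that responses are JSON is mine, not something this README states.

```python
import json
import urllib.request

INGRESS_HOST = "model.homelab"  # host name from the README


def build_request(path, ingress_addr=None):
    """Build a GET for the inference service.

    With ingress_addr=None the URL uses the DNS name directly.
    With an address, the request goes to that address but carries the
    Host header that the Ingress rule matches on.
    """
    target = ingress_addr or INGRESS_HOST
    req = urllib.request.Request(f"http://{target}{path}", method="GET")
    if ingress_addr:
        req.add_header("Host", INGRESS_HOST)
    return req


def fetch_json(req, timeout=5):
    """Send the request and decode the body, assuming a JSON response."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status, json.loads(resp.read())


if __name__ == "__main__":
    # 192.0.2.10 is a placeholder; substitute the ingress controller's address.
    for path in ("/healthz", "/predict?x=3"):
        print(path, fetch_json(build_request(path, ingress_addr="192.0.2.10")))
```

If the request by address succeeds but the request by name fails, the problem is likely in DNS rather than in the Ingress, Service, or pod.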

Demonstrates

- GitOps workflow with Argo CD: desired state in Git, reconciled into the cluster with clear diff/health/sync visibility.

- Kubernetes wiring: labels/selectors → Service endpoints → Ingress routing; no “magic”, just deterministic plumbing.

- Storage topology awareness: learned and handled `local-path` constraints (`WaitForFirstConsumer`, node pinning, PVC scheduling deadlocks).

- Safe isolation: deployed into a dedicated namespace (`mlops-lab`) to avoid impacting existing services.

- Operational troubleshooting: used `kubectl describe`, PVC events, scheduler events, endpoints, and ingress host routing to debug failures end-to-end.

- Runbook discipline: documented prerequisites, verification steps, and failure modes like a production incident playbook.
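
The labels/selectors → Service endpoints wiring mentioned in the list above can be modeled in a few lines. This is a simplified mental model of equality-based selectors, not Kubernetes code. The pod names, IPs, and the `app: inference` label are hypothetical.

```python
def selector_matches(selector, labels):
    """Equality-based match: every selector key/value must appear in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints_for(service, pods):
    """IPs of ready pods whose labels satisfy the Service selector.

    Simplification: a Service without a selector gets no automatic
    endpoints, and not-ready pods are left out entirely.
    """
    selector = service.get("selector") or {}
    if not selector:
        return []
    return [
        pod["ip"]
        for pod in pods
        if pod["ready"] and selector_matches(selector, pod["labels"])
    ]


if __name__ == "__main__":
    service = {"name": "inference", "selector": {"app": "inference"}}
    pods = [
        {"name": "inference-abc", "ip": "10.42.0.11", "ready": True,
         "labels": {"app": "inference", "pod-template-hash": "abc"}},
        {"name": "trainer-xyz", "ip": "10.42.0.12", "ready": True,
         "labels": {"app": "trainer"}},
        {"name": "inference-def", "ip": "10.42.0.13", "ready": False,
         "labels": {"app": "inference"}},
    ]
    print(endpoints_for(service, pods))
```

An empty result from a model like this corresponds to the common failure where a Service has no endpoints, usually because the selector and the pod template labels have drifted apart.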